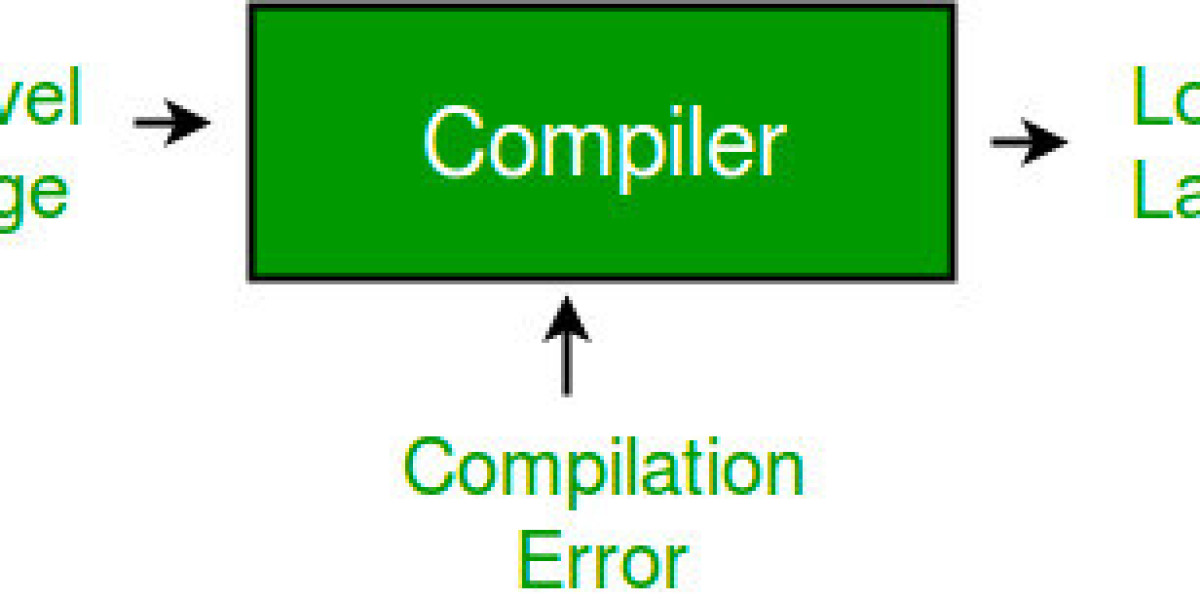

In compiler design, parsing is the phase that checks whether source code conforms to the language's grammar and turns the token stream into a parse tree or abstract syntax tree (AST) for later phases to work on. There are several parsing techniques, each with its own advantages and trade-offs. This guide walks through the main types of parsing in compiler design and where each fits in the overall compilation process.

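One commonly used type of parsing is top-down parsing. This technique starts from the root of the parse tree (the grammar's start symbol) and recursively expands non-terminal symbols according to the grammar's production rules until the leaves match the input tokens. Top-down parsers, especially hand-written recursive-descent parsers, are intuitive to implement and can provide helpful error messages. However, they cannot handle left-recursive rules directly: a rule such as E → E + T makes a naive recursive-descent parser call itself forever without consuming input. Top-down parsers that are not predictive may also backtrack excessively.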
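As a minimal sketch of the top-down idea, the recursive-descent parser below handles arithmetic expressions. The grammar, the `tokenize` helper, and the tuple-based tree shape are assumptions chosen for illustration, not something prescribed by the text. Each non-terminal becomes one method, and the left-recursive rule `expr -> expr '+' term` is rewritten as a loop so the parser never recurses without consuming a token.

```python
import re


def tokenize(text):
    """Split input into number and operator tokens.

    Simplification: characters that match neither pattern are silently skipped.
    """
    return re.findall(r"\d+|[-+*/()]", text)


class Parser:
    def __init__(self, tokens):
        self.tokens = tokens
        self.pos = 0

    def peek(self):
        return self.tokens[self.pos] if self.pos < len(self.tokens) else None

    def expect(self, token):
        if self.peek() != token:
            raise SyntaxError(f"expected {token!r}, found {self.peek()!r}")
        self.pos += 1

    # expr -> term (('+' | '-') term)*
    def expr(self):
        node = self.term()
        while self.peek() in ("+", "-"):
            op = self.peek()
            self.pos += 1
            node = (op, node, self.term())  # left-associative tree
        return node

    # term -> factor (('*' | '/') factor)*
    def term(self):
        node = self.factor()
        while self.peek() in ("*", "/"):
            op = self.peek()
            self.pos += 1
            node = (op, node, self.factor())
        return node

    # factor -> NUMBER | '(' expr ')'
    def factor(self):
        tok = self.peek()
        if tok is not None and tok.isdigit():
            self.pos += 1
            return int(tok)
        if tok == "(":
            self.pos += 1
            node = self.expr()
            self.expect(")")
            return node
        raise SyntaxError(f"unexpected token {tok!r} at position {self.pos}")


def parse(text):
    parser = Parser(tokenize(text))
    tree = parser.expr()
    if parser.peek() is not None:
        raise SyntaxError(f"unexpected trailing token {parser.peek()!r}")
    return tree
```

For example, `parse("1 + 2 * 3")` yields `('+', 1, ('*', 2, 3))`, and an incomplete input such as `"1 +"` raises a `SyntaxError` that names the offending token, which hints at why this style tends to give readable error messages.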

Another widely used parsing technique is bottom-up parsing. This approach begins with the input tokens and works its way up to the root of the parse tree by repeatedly replacing the right-hand side of a production rule with its left-hand side, a step called a reduction. Bottom-up parsers, such as shift-reduce parsers, can handle a broader class of grammars than top-down parsers, including left-recursive ones. However, a grammar may give rise to shift-reduce or reduce-reduce conflicts, which must be resolved by rewriting the grammar or by adding rules such as operator precedence and associativity.
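The shift and reduce steps can be sketched with a deliberately naive parser. The three-rule grammar and the greedy "reduce whenever a right-hand side is on top of the stack" strategy are assumptions that only work because the grammar is tiny; a practical shift-reduce parser consults a parse table to decide between shifting and reducing.

```python
# Hypothetical grammar used only for this illustration:
#   E -> E + T | T
#   T -> id
# The longest right-hand side is listed first so the greedy reducer prefers it.
RULES = [
    ("E", ["E", "+", "T"]),
    ("T", ["id"]),
    ("E", ["T"]),
]


def shift_reduce(tokens):
    """Return (accepted, trace) for a list of tokens such as ["id", "+", "id"]."""
    stack, trace = [], []
    remaining = list(tokens)
    while True:
        # Reduce for as long as some right-hand side sits on top of the stack.
        reduced = True
        while reduced:
            reduced = False
            for lhs, rhs in RULES:
                if stack[-len(rhs):] == rhs:
                    del stack[-len(rhs):]
                    stack.append(lhs)
                    trace.append(f"reduce {lhs} -> {' '.join(rhs)}")
                    reduced = True
                    break
        if not remaining:
            break
        # Otherwise shift the next input token onto the stack.
        stack.append(remaining.pop(0))
        trace.append(f"shift {stack[-1]}")
    # The input is accepted when everything has been reduced to the start symbol.
    return stack == ["E"], trace
```

Calling `shift_reduce(["id", "+", "id"])` accepts the input, and the trace shows the parse tree being built from the leaves upward: each `id` is reduced to `T` before the final reduction `E -> E + T` creates the root.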

LL parsing is a type of top-down parsing that reads the input from left to right, produces a leftmost derivation, and uses lookahead tokens to decide which production rule to apply. It is commonly used for programming languages and is efficient for LL(1) grammars, where the next production can be determined by looking only one token ahead, so no backtracking is needed. LL parsers are relatively easy to construct and understand, making them popular in practice.
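A table-driven LL(1) parser makes the "one token of lookahead" decision explicit. The sketch below assumes a small hand-written grammar and parse table (`E -> T E'`, `E' -> + T E' | epsilon`, `T -> id`); the names `TABLE`, `NONTERMINALS`, and `ll1_parse` are illustrative. In practice the table is computed from the grammar's FIRST and FOLLOW sets rather than typed in by hand.

```python
# Hand-written LL(1) table: (non-terminal, lookahead) -> right-hand side to expand.
# An empty list stands for the epsilon production.
TABLE = {
    ("E", "id"): ["T", "E'"],
    ("E'", "+"): ["+", "T", "E'"],
    ("E'", "$"): [],
    ("T", "id"): ["id"],
}
NONTERMINALS = {"E", "E'", "T"}


def ll1_parse(tokens):
    """Return the sequence of productions applied (a leftmost derivation)."""
    tokens = list(tokens) + ["$"]  # "$" marks the end of the input
    stack = ["$", "E"]             # start symbol on top of the end marker
    pos = 0
    derivation = []
    while stack:
        top = stack.pop()
        look = tokens[pos]
        if top in NONTERMINALS:
            # The single lookahead token selects the production.
            rhs = TABLE.get((top, look))
            if rhs is None:
                raise SyntaxError(f"unexpected {look!r} while expanding {top}")
            derivation.append((top, rhs))
            stack.extend(reversed(rhs))  # leftmost symbol ends up on top
        elif top == look:
            pos += 1                     # terminal matches: consume it
        else:
            raise SyntaxError(f"expected {top!r}, found {look!r}")
    return derivation
```

Each table lookup is a dictionary access, so the parser makes one pass over the input and never revisits a token.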

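On the other hand, LR parsing is a type of bottom-up parsing that reads the input from left to right, produces a rightmost derivation in reverse, and uses its current state together with any lookahead to decide whether to shift or reduce. LR parsers can handle a wider range of grammars than LL parsers, including left-recursive ones. Ambiguous grammars are not LR, but parser generators can often still process them by resolving the resulting conflicts with precedence and associativity declarations. The variants LR(0), SLR(1), LALR(1), and LR(1) all require constructing parsing tables from the grammar rules; they differ in how much lookahead information the tables encode, and therefore in table size and in the grammars they accept.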
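To make the role of the parsing tables concrete, here is a minimal table-driven LR parser for the left-recursive grammar `E -> E + n | n`. The `ACTION` and `GOTO` tables were worked out by hand as an SLR(1) table for this one grammar and are assumptions of the sketch; a parser generator would normally derive them automatically.

```python
# Productions by number: (left-hand side, length of right-hand side)
PRODUCTIONS = {
    1: ("E", 3),  # E -> E + n
    2: ("E", 1),  # E -> n
}

# ACTION[(state, lookahead)] says whether to shift, reduce, or accept.
ACTION = {
    (0, "n"): ("shift", 2),
    (1, "+"): ("shift", 3),
    (1, "$"): ("accept", None),
    (2, "+"): ("reduce", 2),
    (2, "$"): ("reduce", 2),
    (3, "n"): ("shift", 4),
    (4, "+"): ("reduce", 1),
    (4, "$"): ("reduce", 1),
}

# GOTO[(state, non-terminal)] gives the state to enter after a reduction.
GOTO = {(0, "E"): 1}


def lr_parse(tokens):
    """Return the production numbers in the order they are reduced."""
    tokens = list(tokens) + ["$"]  # "$" marks the end of the input
    states = [0]
    pos = 0
    reductions = []
    while True:
        action = ACTION.get((states[-1], tokens[pos]))
        if action is None:
            raise SyntaxError(f"unexpected {tokens[pos]!r} in state {states[-1]}")
        kind, arg = action
        if kind == "shift":
            states.append(arg)
            pos += 1
        elif kind == "reduce":
            lhs, length = PRODUCTIONS[arg]
            del states[-length:]                    # pop one state per RHS symbol
            states.append(GOTO[(states[-1], lhs)])  # then follow the GOTO entry
            reductions.append(arg)
        else:  # accept
            return reductions
```

Note that the left-recursive rule is used as written, with no grammar rewriting: `lr_parse(["n", "+", "n"])` reduces by production 2 and then by production 1.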

In conclusion, exploring the different types of parsing techniques in compiler design provides us with a deeper understanding of how programming languages are processed and transformed into executable code. Each parsing technique has its own strengths and weaknesses, and the choice of parsing technique depends on factors such as the language's grammar complexity, efficiency requirements, and error-handling capabilities. By familiarizing ourselves with these parsing techniques, we can build efficient compilers that accurately analyze and interpret programming languages, paving the way for reliable and high-performance software systems.

Beyond checking syntax, parsing supports several other parts of the compiler. Here are some key advantages of parsing in compiler design:

- Syntax Analysis: Parsing helps in analyzing the syntax of a programming language by ensuring that the source code adheres to the specified grammar rules. It helps identify and handle syntax errors, ensuring that the code is well-formed and can be further processed.

- Structure Understanding: Parsing constructs a hierarchical representation of the source code, such as a parse tree or abstract syntax tree (AST). This representation helps in understanding the structure of the program, including the relationships between different elements such as statements, expressions, and declarations.

- Semantic Analysis: The tree produced by the parser is the input to semantic analysis, and some compilers interleave semantic actions with parsing itself. This includes type checking, symbol table construction, and resolving references to variables, functions, and other entities. Semantic analysis ensures that the code follows the rules and constraints of the programming language that a grammar alone cannot express.

- Compiler Optimization: Parsing provides a foundation for various compiler optimization techniques. Optimizations such as constant folding and dead code elimination can be applied to the hierarchical representation obtained through parsing, while others, such as loop transformations and register allocation, usually operate on lower-level intermediate representations derived from it. These optimizations improve the efficiency and performance of the compiled code.

- Language Extension: Parsing facilitates language extension by allowing the addition of new syntax and semantics to an existing programming language. By extending the grammar and updating the parsing process, new language constructs and features can be introduced, providing enhanced expressiveness and flexibility.
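The structure and optimization points above can be illustrated with a tiny AST and a constant-folding pass over it. The node classes `Num`, `Var`, and `BinOp` and the `fold` function are hypothetical names for this sketch; real compilers use richer node types and typically run many such passes.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Num:
    value: int


@dataclass(frozen=True)
class Var:
    name: str


@dataclass(frozen=True)
class BinOp:
    op: str
    left: "Expr"
    right: "Expr"


Expr = Union[Num, Var, BinOp]

# Only these operators are folded in this sketch.
OPS = {"+": lambda a, b: a + b, "*": lambda a, b: a * b}


def fold(node):
    """Replace operator nodes whose operands are both constants with the result."""
    if isinstance(node, BinOp):
        left, right = fold(node.left), fold(node.right)
        if isinstance(left, Num) and isinstance(right, Num) and node.op in OPS:
            return Num(OPS[node.op](left.value, right.value))
        return BinOp(node.op, left, right)
    return node  # Num and Var are already as simple as they can be


# AST for the expression 2 * 3 + x
tree = BinOp("+", BinOp("*", Num(2), Num(3)), Var("x"))
folded = fold(tree)  # BinOp("+", Num(6), Var("x"))
```

Because the tree mirrors the nesting of the source expression, the pass can simplify `2 * 3` without touching the part that depends on `x`.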

Understanding the different types of parsing in compiler design is essential for building efficient and robust compilers. We have explored the two broad families of parsing techniques, top-down and bottom-up parsing, together with their most widely used representatives, LL and LR parsing. Each technique has its own strengths and is suitable for different scenarios.

Top-down parsing, of which recursive descent is the best-known form, starts from the root of the parse tree and works towards the leaves. It is intuitive and easy to implement, but it cannot handle left-recursive rules directly and may suffer from backtracking issues.
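The left-recursion problem is usually handled by rewriting the grammar before a top-down parser is built. The sketch below applies the standard transformation for immediate left recursion, turning `A -> A α | β` into `A -> β A'` and `A' -> α A' | ε`. The function name and the list-of-lists grammar encoding are assumptions for illustration, and indirect left recursion is not covered.

```python
def eliminate_left_recursion(nonterminal, alternatives):
    """Remove immediate left recursion from one non-terminal.

    `alternatives` is a list of right-hand sides, each a list of symbols.
    An empty list in the result stands for the epsilon production.
    """
    # Tails of the alternatives that start with the non-terminal itself.
    recursive = [alt[1:] for alt in alternatives if alt and alt[0] == nonterminal]
    others = [alt for alt in alternatives if not alt or alt[0] != nonterminal]
    if not recursive:
        return {nonterminal: alternatives}  # nothing to rewrite
    fresh = nonterminal + "'"               # new helper non-terminal
    return {
        nonterminal: [alt + [fresh] for alt in others],
        fresh: [alt + [fresh] for alt in recursive] + [[]],
    }


# E -> E + T | T   becomes   E -> T E'   and   E' -> + T E' | epsilon
rewritten = eliminate_left_recursion("E", [["E", "+", "T"], ["T"]])
```

The rewritten grammar generates the same strings, but every expansion of `E` now consumes a `T` first, so a recursive-descent parser can no longer loop without making progress.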

Bottom-up parsing, on the other hand, starts from the leaves and builds the parse tree by reducing the input based on a set of grammar rules. It is more powerful and can handle a broader class of grammars, but shift-reduce and reduce-reduce conflicts in the grammar must be resolved before a parser can be generated.

LL parsing is a top-down parsing technique that uses a lookahead symbol to decide which production rule to apply. It is widely used for programming languages and is efficient for LL(1) grammars. Grammars often need to be left-factored first, so that alternatives sharing a common prefix can be told apart with a single token of lookahead.
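Left factoring can be sketched as a small grammar transformation. The function below groups the alternatives of one non-terminal by their first symbol and pulls each group's longest common prefix out into a fresh non-terminal. The names `left_factor` and `common_prefix` and the list-of-lists grammar encoding are assumptions for illustration; a full implementation would repeat the step until no two alternatives share a prefix.

```python
from collections import defaultdict


def common_prefix(alts):
    """Longest sequence of symbols shared by the start of every alternative."""
    prefix = []
    for symbols in zip(*alts):
        if len(set(symbols)) != 1:
            break
        prefix.append(symbols[0])
    return prefix


def left_factor(nonterminal, alternatives):
    """One round of left factoring; an empty list stands for epsilon."""
    groups = defaultdict(list)
    for alt in alternatives:
        groups[alt[0] if alt else None].append(alt)
    result = {nonterminal: []}
    counter = 0
    for first, alts in groups.items():
        if first is None or len(alts) == 1:
            result[nonterminal].extend(alts)  # nothing shared: keep as is
            continue
        counter += 1
        fresh = nonterminal + "'" * counter   # new helper non-terminal
        prefix = common_prefix(alts)
        result[nonterminal].append(prefix + [fresh])
        result[fresh] = [alt[len(prefix):] for alt in alts]
    return result


# S -> if E then S else S | if E then S | a
factored = left_factor(
    "S",
    [["if", "E", "then", "S", "else", "S"], ["if", "E", "then", "S"], ["a"]],
)
```

After factoring, `S` has the alternatives `if E then S S'` and `a`, and the choice between `else S` and nothing is deferred to `S'`, where the next token decides it. Left factoring alone does not make this particular grammar unambiguous (the classic dangling-else issue remains), so it is a necessary step rather than a sufficient one.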

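LR parsing is a bottom-up technique that uses a lookahead symbol to decide when to reduce. It can handle a wider class of grammars than LL parsing, including left-recursive grammars, and parser generators can often cope with ambiguous grammars by resolving conflicts through precedence and associativity declarations. In exchange, it requires more complex table-construction algorithms such as LR(0), SLR(1), LALR(1), or LR(1).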

By exploring these different parsing techniques, you have gained insights into their strengths, limitations, and use cases. Remember that the choice of parsing technique depends on factors such as the grammar of the language, the efficiency requirements, and the complexity of the parsing process.

As a compiler designer, it is crucial to evaluate the requirements of your specific language and select the appropriate parsing technique, whether that is a hand-written recursive-descent parser, a table-driven LL(1) parser, or a generated shift-reduce (LR) parser. Continuously improving your understanding of parsing techniques and staying updated with advancements in compiler design will enable you to build efficient and reliable compilers that can handle a wide range of programming languages.